Open Journal of Mathematical Analysis

ISSN: 2616-8111 (Online) 2616-8103 (Print)

DOI: 10.30538/psrp-oma2020.0055

Modeling the movement of particles in tilings by Markov chains

Department of Mathematics and Physics, Institut Supérieur Pédagogique de Bukavu, Democratic Republic of the Congo.; (Z.B)

Department of Mathematics, University of the Free State, South Africa.; (A.R)

Department of Mathematical Sciences, St. Johns University of Tanzania, Tanzania.; (M.S)

\(^1\)Corresponding Author: dieudonne.z.balike@aims-senegal.org

Abstract

Keywords:

1. Introduction

Living organisms consist of one or more tiny components of several types and shapes termed cells on which molecules move in continuous random motion. These cells and the molecules can be considered as subdivisions of a 2-dimensional plane on which particles randomly move. The plane can be much wider, but considering that each molecule moving on the plane has a starting cell, we can restrict this movement to a few groups of cells. The knowledge obtained from this small group of cells can be extended to improve our understanding of the molecules movement on a larger scale. The shape of the cells dictates the different random possibilities of a molecule movement to neighboring cells from the starting cell.

A cell can assume different shapes, including square and hexagonal shapes. In both square and hexagonal tilings assumed by a cell, the set of all possibilities of a molecule moving towards a neighboring cell can be seen as a Markov chain \(\{X_t , t > 0\}\) [1]. This Markov chain is driven by a parameter \(p\) which represents the probability for the molecule under study to move from one cell to a neighboring cell. A Markov chain can be discrete or continuous depending on whether the time considered is discrete or continuous [2].

A recent study made in [1] on this topic considered discrete time process. It was demonstrated that the molecule is faster in the hexagonal tiling than in the square tiling.

In this paper, we will look at the continuous process and compare the result with those found in the discrete process. We will examine how the probability impacts the movement of a molecule from cell \(i\) to cell \(j\). When a molecule moves from a cell \(i\) to \(j\), the possible next step of the movement depends on the number of cells enclosing it. For example, from the central cell of the square tiling , a molecule has four possibilities to move to while there are six possibilities in the hexagonal tiling.

In the aforementioned study ([1]), two starting positions were considered: the central cell and the surrounding ones. We only consider the central cell to be the starting position of the molecule since each cell (even the border cells) can be considered as central by enlarging the plane.

Infinitesimal generators in continuous time will replace the transition matrices in discrete time to describe the movement of the molecule. In this study, the space is discrete.

Sometimes, the transition matrices can be very large and almost impossible to handle for doing computations. In order to reduce the calculations, we will use the state symmetries after identifying the non-equivalent cells in each tiling, then we will lump states with ²the same properties [3]. Symmetric groups afford a precise definition of structural equivalence for Markov chains states in aggregating them to making a partition of the original Markov process in small subsets that conserve all the previous properties [4]. This aggregation results in a new Markov chain (aggregated chain) with fewer number of states such that the finite probabilities of aggregated states equals the finite probabilities of the corresponding states of the initial Markov chain [5].

The specific questions we want to address include:- (1) What is the effect of the discrete or continuous nature of time in the oscillatory movement of the molecule?

- (2) What is the effect of the probability, the time and the shape of the tiling in the attainment of the equilibrium in continuous Markov process under consideration ?

2. Markov chains

2.1. Definitions

Definition 1. A sequence of random variables \(\{X_n\}_{n_{\geq 0}}\) in a countable space E is called stochastic process. E is called states space whose elements will be written \textit{i, j, k, ...}.

When \(X_n = i\), the process is in the state \(i\) or visits the state \(i\) at the time \(n\). Sequences of random variables which are independent and identically distributed are stochastic process but they do not take into account the dynamic of evolution of systems due to their independence. To introduce this dynamic, one must take into account the influence of the past, which Markov chains do, like the equation of recurrence in deterministic systems [2]. Then we introduce the following:Definition 2. For all \(n\in \mathbb{N}\) and all states \(i_0 , i_1, i_2, i_3,...,i_{n-1},i,j \in E\),

Since all \(p_{ij}\) are probabilities and the transition happens from a state \(i\) to a state \(j\), one has \(p_{ij}\geq 0\) and \[\sum_{k\in E} p_{ik}=1,~~ \forall i,j.\] A matrix indexed by E and satisfying the above properties is a stochastic matrix.

A Markov chain is said to be \textit{discrete time} if the state space of the possible outcomes of the process is finite.

2.2. Continuous-time Markov chains

Definition 3. A continuous-time Markov chain \(X(t)\) is defined by two components: a jump chain, and a set of holding time parameters \(\lambda_i.\) The jump chain consists of a countable set of states \(S\subset\{0,1,1,...\}\) along with transition probabilities \(p_{ij}\). We assume \(p_{ii}=0\), for all non-absorbing states \(i\in S\). We assume that:

- 1) If \(X(t)=i\), the time until the state changes has exponential \((\lambda_i)\) distribution;

- 2) If \(X(t)=j\), the next state will be in \(j\) with probability \(p_{ij}\).

Assuming the states are \(1, 2,..., r\), then the state transition matrix for any \(t\geq 0\) is given by

The following definition from [9] is important for the suit of this paper.

Definition 4. Let \(\{X_t\}\) be a Markov chain with state space \(S=\{1,2,\cdots,r\}\) and initial vector \(\pi\). Given a partition \(\bar{S}=\{E_1, E_2, \cdots, E_v\}\) of the space \(S\), a new chain \(\bar{X}_n\) can be defined as follows: At the \(jth\) step, the state of a new chain is the set \(E_k\) when \(E_k\) contains the state of the \(jth\) step of the original chain.

Precisely, a continuous Markov chain is said to be lumpable with respect to the partition \(\bar{S}\) if for \(i,j \in E_\eta\),Theorem 1. Let X(t) be an irreducible continuous-time Markov chain with stationary distribution \(\pi\). If it is lumpable with respect to a partition of the state space, then the lumped chain also has a stationary distribution \(\bar{\pi}\) whose components can be obtained from \(\pi\) by adding corresponding components in the same cell of partition.

Infinitesimal Generator of Continuous-time Markov chains

The infinitesimal generator matrix, usually shown by G, gives us an alternative way of analyzing continuous-time Markov chains. Consider a continuous-time Markov chain \(X(t)\). Assume \(X(0)=i\). The chain will jump to the next state at time \(T_1\), where \(T_1\sim Exponential(\lambda_i)\). In particular, for a very small \(\delta \geq 0\), we can write \begin{equation*} P(T_1 \leq \delta)=1-e^{-\lambda_i \delta}\simeq 1-(1-e^{-\lambda_i \delta}) =\lambda_i\delta. \end{equation*} Thus, in a short interval of length, \(\delta\) the probability of leaving state \(i\) is approximately \(\lambda_i \delta\). For this reason, \(\lambda_i\) is often called the transition rate out of state \(i\). Formally, we can writeDefinition 6. For a continuous-time Markov chain, we define the generator matrix G. The (i,j)th entry of the transition matrix is given by

Definition 6. We say that an infinitesimal generator G is lumpable if

First hitting times

Definition 7. Let (\(X_t)_{t\ge 0}\) be a Markov chain with generator matrix G. The hitting time of a subset A\(\subset \)S is the random variable $$\tau^A (\omega )=inf\{t\ge 0|X_t(\omega)\in A\}$$ with the usual convention \(inf\emptyset =\infty\).

Theorem 2. The vector of mean hitting times \(k^A=\{ k_i^A|i\in S\}\) is the minimal nonnegative solution of

3. Investigation of the movement on small tiling in continuous time

In this section we investigate the motion of a molecule in two small tilings: the square tiling and the hexagonal one. This movement from a cell \(i\) to a cell \(j\) is considered as being an homogeneous Markov chain. States with the same stochastic behavior are lumped together using symmetries of states deduced from groups acting on the cellular complexes. According to [12], the group acting on a polygon is a dihedral group. In the particular case of the small square tiling, we have the symmetric group \(S_9\) and for the hexagonal tiling we have \(S_7\). Thanks to these groups, we will use the technique of lumpability. This lumpability is effective in forming new chains from the old ones without losing the primitive properties and simplifying tedious calculations.At each step, the molecule is supposed to leave the central cell and move into the surrounding cells. In [1], it is shown that the movement of biological molecule on tilings (either square or hexagonal) can be modeled by a (discrete time) Markov chain. We will extend this movement of biological molecule on small tiling in continuous time.

3.1. Continuous-time process in small square tilng

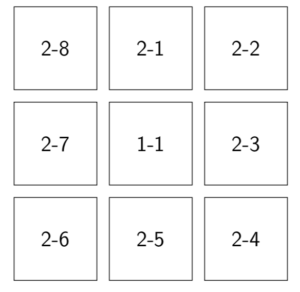

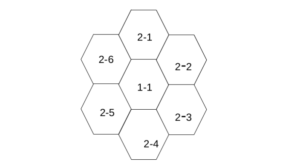

We already have important results from previous works on discrete-time Markov process in small cell complexes ([1]). We want to extend this study to the continuous case especially in the square tiling. We will assume a discrete space throughout this study.Figure 1. Small square tiling.

3.1.1. Infinitesimal generator and probability matrix

The molecule has four possibilities of moving to neighboring state with, assume, probability \(p\). All cells can be reached in one step from the center except those located at the corner (corner cells) of the tiling. Therefore, the infinitesimal generator, \(G\) for a square tiling takes the formWe now compute the probability matrix \(P(t)\) defined by Equation (3) by using the Chapman-Kolmogorov backward equation (see Equation (5)).

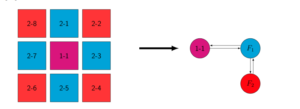

A direct computation of Equation (5) will be tedious because of the size of Equation (12). We therefore lump the symmetric states as depicted on Figure 2. This figure shows that the symmetric group \(S_9\) is a partition of the proposed Markov chain.

Figure 2. Lumpability of small square tiling

The new infinitesimal generator is obtained from

3.1.2 Stationary distribution and limiting probability

A stationary distribution of a Markov chain is a probability distribution that remains unchanged in the Markov chain as time progresses. Typically, it is represented as a row vector \(\pi\) whose entries are probabilities summing to \(1\) and, given the transition matrix \(P\), it satisfies the Equation (4). It can be shown (see [14])that the Equation (4) is equivalent toDefinition 8. The limiting distribution of a Markov chain seeks to describe how the process behaves a long time after.

For the limiting distribution to exist, the following limit must exist for any states \(i\) and \(j\)For time-homogeneous Markov chains, any limiting distribution is a stationary distribution [15]. The relation Equation (28) applied on the matrix Equation (24) provides the following matrix: $$ \mathbf{P}_{\pi}=\begin{pmatrix} \frac{1}{9}&\frac{4}{9} & \frac{4}{9} \\ \frac{1}{9}&\frac{4}{9} & \frac{4}{9} \\ \frac{1}{9}& \frac{4}{9} & \frac{4}{9} \end{pmatrix}, $$ which is the limiting distribution of the Markov chain deduced from the square tiling. It is a stochastic matrix. It satisfies the Equation (29) as expected.

3.1.3. Calculation of the mean hitting times

In this section, we want to compute the mean value of the time to be spent by the molecule in a cell for the first time by using the Equation (11).

For \(A=\{1\}\), we have the following system:

\begin{equation*}

\left\lbrace \begin{array}{rrr}

k_1^A &=&0,\\

g_{21}k_1^A +g_{22}k_2^A +g_{23}k_3^A &= &-1,\\

g_{31}k_1^A +g_{32}k_2^A +g_{33}k_3^A &=&-1,\\

\end{array}

\right.

\end{equation*}

whose solution is \( \begin{pmatrix}

0 \\

\\

\frac{2}{\alpha} \\

\\

\frac{5}{2\alpha}

\end{pmatrix} \) after substituting all the \(g_{ij}\) with their corresponding values in Equation (14). In the same way, we respectively have for \(A=\{2\}\) and \(A=\{3\}\) the following vectors:

\( \begin{pmatrix}

\frac{1}{4\alpha} \\

\\

0 \\

\\

\frac{1}{2\alpha}

\end{pmatrix} \) and \( \begin{pmatrix}

\frac{7}{8\alpha} \\

\\

\frac{5}{8\alpha} \\

\\

0

\end{pmatrix}. \)

The undermentioned matrix H summarizes the findings for the mean hitting times:

\begin{equation}

H= \begin{pmatrix}

0 & \frac{1}{4\alpha} & \frac{7}{8\alpha} \\

& & \\

\frac{2}{\alpha} & 0 & \frac{5}{8\alpha} \\

& & \\

\frac{5}{2\alpha} & \frac{1}{2\alpha} & 0

\end{pmatrix}.

\label{mean hitting time square}

\end{equation}

(30)

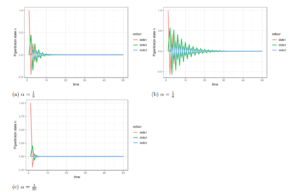

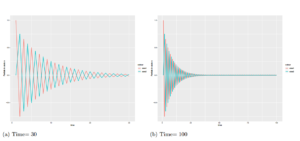

3.1.5. R simulation of effect of probability and time on the movement in square tiling

Figure 3 and 4 represent the transition rate against time. The Figure 3, in particular, shows how the variation in the transition rates affects the attainment of the equilibrium. By comparing graph 3a and graph 3c, we can see that the variation in the \(\alpha\) parameter value affects the oscillation of the state curves. This means that the variation of the transition rates influences the attainment of the equilibrium. We can notice that on the graph 3c where the probability value is the smallest, the equilibrium state is reached quicker than on the other two graphs of the same figure.

Figure 3. Visualization of the effect of the variation of the transition rates for a fixed time (time=50) in a small square tiling

For example, if we choose the second as unit of time, we can note that from graph Figure 4a starts the equilibrium phase almost at the eighth second. Considering a larger interval of time (as 100 at Figure 4b or Figure 4c), the equilibrium attainment time is still the fifteenth second. It can be seen that the starting state curve is less steep on Figure 4a where the time is 30 than in graph 4c where the time is 150.

Figure 4. Visualization of the effect of the variation of time for a fixed transition rate (\(\alpha=\frac{1}{8}\)) in a square tiling

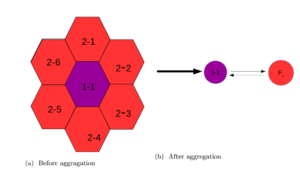

4. Continuous-time process in small hexagonal tiling

In this section, we examine how some parameters influence the behavior of the motion in the hexagonal tiling. We will consider the aggregated complex cell for reducing the computations. The unique starting position is the central cell 1-1.4.1. Infinitesimal generator and probability matrix

Let us consider the small hexagon depicted on Equation (5). From the central cell, the molecule (the system) has six possible equiprobable destinations which are its neighboring cells. Based on this information, we then produce the following infinitesimal generatorFigure 5. Small hexagonal tiling

Figure 6. Lumpability of states in small hexagonal tiling

4.2. Stationary distribution and limiting probability

The stationary distribution of the lumped chain is the vector \(\pi(\pi_1, \pi_2)\) such that4.3. Calculation of the mean hitting time

It is easy to check that the matrix of the mean hitting time for the movement of the particle in the hexagonal tiling is4.4. Simulation of the effects of probability and time on the movement

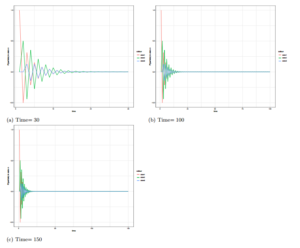

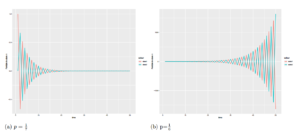

Figure 7 and Figure 8 plot the impact of the probability and the time on the attainment of the equilibrium in a hexagonal tiling when we consider continuous time.Figure 7. Simulation of effect of variation of time on the attainment of the equilibrium for fixed probability(transition rate \(\alpha=\frac{1}{8}\))

Figure 8. Simulation of effect of variation of probability on the attainment of the equilibrium for fixed time

The collection of graphs illustrated on Figure 7 and Figure 8 depicts how fast the molecule reaches the equilibrium in the hexagonal tiling in continuous time. The main factor which affects the attainment of the equilibrium is the transition rate. The transition rate \(\alpha=\frac{1}{6}\) is a critical value which affects particularly the motion of the molecule in the hexagonal tiling. For this value, if the molecule quits the central cell, it will never come back into it. A quick substitution in Equation (35) and a glance on Figure 8b can allow to verify it.

5. Discussion of results and conclusion

Under continuous-time conditions, we have checked the same results. In fact, Equation (30) and Equation (35) show that the average transition time from state 1 to state 2 is greater in the square tiling than in the hexagonal tiling. A glance at the panels of graphs depicted above shows that the greater the probability (transition rate), the later the equilibrium is reached in both square and hexagonal tilings. However, when comparing the movement in both tilings, we realize that the equilibrium is quickly reached in hexagonal tiling than in the square one. Increasing the value of the transition rate leads to a quick or late attainment of the equilibrium.

In this paper, the movement of a molecule in two kinds of tilings has been studied: the square tiling and the hexagonal one. Its has been established that only two parameters, among the four considered, have an impact on the quick or late attainment of the equilibrium. The parameters under consideration in this study were the nature of the time (discrete or continuous), the probability (so called transition rate), the time and the shape of the tiling.

In [1], the movements of the molecules in the tilings were modelled using discrete-time Markov chains. It was established that this motion reaches the equilibrium point faster in the hexagonal tiling than in the square one. This same finding is established in continuous-time Markov chains. It is to be deduced that the nature of time does not have an impact on reaching the equilibrium point.However, the shape of the tiling is a core parameter for the attainment of the equilibrium. That is, the molecule is faster in hexagonal tiling than in the square one.

Another important parameter is the transition rate in the infinitesimal generator. During this study, it has been demonstrated that for both hexagonal and square tilings, the rapidity to attain the equilibrium depends also upon the transition rate under consideration. Hence, the smaller the transition rate, the faster the molecule is, in reaching the equilibrium position and vice versa. To put it in a nutshell, this study has proven the influence of transition rate and the shape of the tiling were important for the rapidity of the movement. Other parameters do not have considerable impact on the movement.

Author Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.Competing Interests

The author(s) do not have any competing interests in the manuscript.References

- Saiguran, M., Ring, A., & Ibrahim, A. (2019). Evaluation of Markov chains to describe movements on tiling. Open Journal of Mathematical Sciences, 3(1), 358-381.[Google Scholor]

- Brémaud, P. (2009). Initiation aux Probabilités: et aux chaînes de Markov. Springer Science & Business Media.[Google Scholor]

- Ring, A. (2004). State symmetries in matrices and vectors on finite state spaces. arXiv preprint math/0409264.[Google Scholor]

- Barr, D. R., & Thomas, M. U. (1977). An eigenvector condition for Markov chain lumpability. Operations Research, 25(6), 1028-1031.[Google Scholor]

- Benec, V. E. (1978). Reduction of network states under symmetries. Bell System Technical Journal, 57(1), 111-149.[Google Scholor]

- Nagle, R. K., Saff, E. B., Snider, A. D., & West, B. (1996). Fundamentals of differential equations and boundary value problems. Reading: Addison-Wesley, Pearson.[Google Scholor]

- Whitt, W. (2013). Continuous-time Markov chains. Department of Industrial Engineering and Operations Research, Columbia University, New York, December 2013.[Google Scholor]

- Kemeny, J. G., & Snell, J. L. (1976). Finite markov chains. Undergraduate Texts in Mathematics.[Google Scholor]

- Tian, J. P., & Kannan, D. (2006). Lumpability and commutativity of Markov processes. Stochastic analysis and Applications, 24(3), 685-702.[Google Scholor]

- Yin, G. G., & Zhang, Q. (2012). Continuous-time Markov chains and applications: a two-time-scale approach (Vol. 37). Springer Science & Business Media, New York.[Google Scholor]

- Cameron, M., & Gan, T. (2016). A graph-algorithmic approach for the study of metastability in markov chains. arXiv preprint arXiv:1607.00078.[Google Scholor]

- https://en.wikipedia.org/wiki/Dihedral\_group, 20/01/2020

- Meyn, S. P., & Tweedie, R. L. (2012). Markov chains and stochastic stability. Springer Science & Business Media.[Google Scholor]

- Levin, D. A., & Peres, Y. (2017). Markov chains and mixing times (Vol. 107). American Mathematical Socity.[Google Scholor]

- Schuette, C., & Metzner, P. (2009). Markov chains and Jump Processes. An Introduction to Markov and Jump Processes on Countable States Spaces. Freie Universit Berlin, Berlin.[Google Scholor]