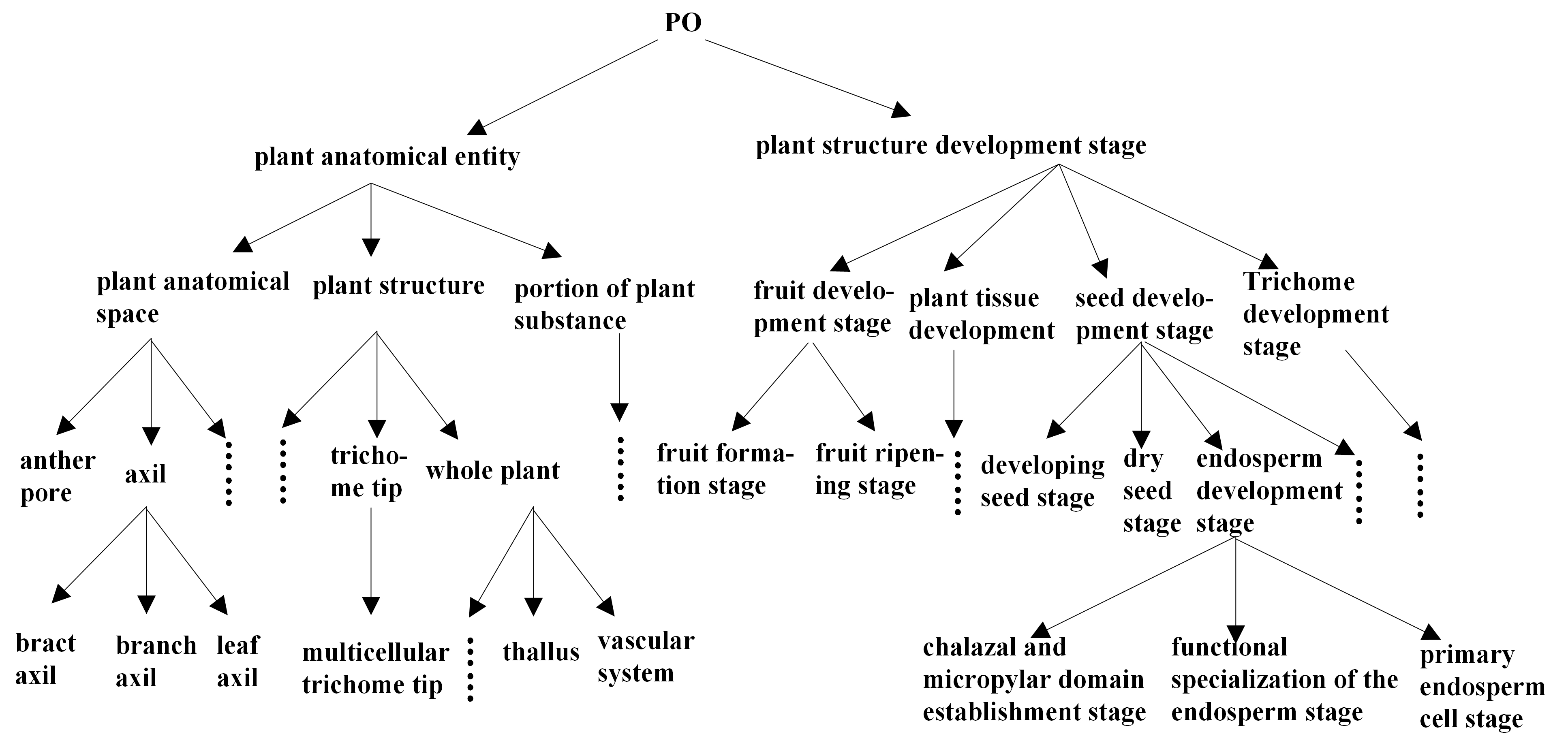

With the extensive application of ontology in the fields of information retrieval and artificial intelligence, the ontology-based conceptual similarity calculation becomes a hot topic in ontology research. The essence of ontology learning is to obtain the ontology function through the learning of ontology samples, so as to map the vertices in each ontology graph into real numbers, and finally determine the similarity between corresponding concepts by the difference between real numbers. The essence of ontology mapping is to calculate concepts from different ontologies. In this paper, we introduce new ontology similarity computing in view of stochastic primal dual coordinate method, and two experiments show the effectiveness of our proposed ontology algorithm.

Ontologies play an increasingly important role in the fields of software engineering, artificial intelligence, information retrieval, and Web services. According to the degree of domain dependence, the ontology can be divided into Top Ontology, Domain Ontology, Task Ontology and Application Ontology. As a kind of description framework and standard specification of knowledge, it is the representation of related concepts and the relationship between concepts. It selects general terms and concepts in the fields described, and uses ontology language to define and describe. Standard universal unambiguous domain ontology. Domain ontology is a tool for knowledge exchange and sharing between designers and systems. It facilitates the processing of structured knowledge and supports semantic operations. It has been widely used in knowledge management and data mining. The introduction of ontology concept promotes the integration of traditional information retrieval mechanism and semantic content, which can effectively improve the search efficiency and search accuracy of knowledge. Several algorithms and related results on ontology can refer to Wang et al. [1], Cheng et al. [2], Peng et al. [3], Zhang et al. [4], Zhang et al. [5], Zhang et al. [6], Xue and Pan [7], Zhong et al. [8], Jiang et al. [9], and Liang [10].

With the advent of the era of big data, the ontology needs to deal with not only text concepts, but also images, audio and video. Therefore, machine learning algorithms have been widely used in ontology similarity calculations in recent years. A classic method is to learn the ontology function through ontology sample set, this ontology function maps the whole ontology graph to a one-dimensional real number, and each ontology vertex corresponds to a real number. The similarity of the concepts is judged by comparing the distances between the real numbers. Since the information of each ontology vertex is expressed as a \(p\)-dimensional vector, the ontology function can be regarded as a map \(f: \Bbb R^{p}\to \Bbb R\). In this paper, we present an ontology learning algorithm for ontology similarity measuring and ontology mapping by means of stochastic primal dual coordinate trick.

First, we use a \(p\)-dimensional vector to express all the information of a vertex \(v\) (corresponding to a concept). For convenience, we use \(v\) to denote vertex, vector and concept as well. Assume that \(\{v_{i}\}_{i=1}^{n}\) is the ontology sample set, \(n\) is the capacity of ontology sample, \(\{l_{i}\}_{i=1}^{n}\) are the ontology loss function, \(\beta\) is the ontology sparse vector, and \(g\) is the punish function. In this way, to learn an optimal ontology function can be transform to learn an ontology sparse vector \(\beta\), and generally the optimization model can be formulated as

Let \({\bf V}\in\Bbb R^{n\times p}\) be the ontology information matrix which is denoted as \({\bf V}=[v_{1},\cdots,v_{n}]^{T}\), and its \(j\)-th column is denoted as \({\bf V}_{:j}\). Let \(\{l_{i}^{*}\}_{i=1}^{n}\) be the convex conjugate function of \(\{l_{i}\}_{i=1}^{n}\), and \(g^{*}\) be the convex conjugate function of \(g\). Then, the dual problem of ontology problem (1) can be written as

and \(p_{i}=0\) otherwise. Then, the above stochastic primal dual coordinate based ontology implementation can be modified as follows. Input mini-batch size \(a\), \(\beta^{0}\), and \(\alpha^{0}\); for \(t=1,2,\cdots\) to converged do: \(\alpha_{i}^{t}\gets\) update(\(\alpha_{i}^{t-1}\)) (for \(i\in\{1,\cdots,n\}\)), \(\beta^{t}\gets\) update(\(\beta^{t-1}\)), determine \(\kappa^{t}\) and \(p\) using (4), \((\widehat{\beta}^{0},\widehat{\alpha}^{0})\gets (\beta^{t},\alpha^{t})\), after the inner loop do \((\beta^{t},\alpha^{t})\gets(\widehat{\beta}^{u},\widehat{\alpha}^{u})\) if \(P_{\lambda}(\widehat{\beta}^{u})-D_{\lambda}(\widehat{\alpha}^{u})< P_{\lambda}(\beta^{t})-D_{\lambda}(\alpha^{t})\); for inner loop \(u=1,\cdots,\lceil\frac{n}{a}\rceil\), randomly pick up a subset indices \(K\), \(\widehat{\alpha}_{i}^{u}\gets\) update(\(\widehat{\alpha}_{i}^{u-1}\)) if \(i\in K\), and \(\widehat{\beta}^{u}\gets\) update(\(\widehat{\beta}^{u-1}\)).

By setting \(\psi_{j}^{t}=|\beta_{j}^{t}-\nabla g_{j}^{*}(\frac{{\bf V}_{:j}^{T}\alpha^{t}}{n\lambda})|\), the the above stochastic primal dual coordinate based ontology implementation can be further modified as follows. Input mini-batch size \(a\), \(\beta^{0}\), and \(\alpha^{0}\); for \(t=1,2,\cdots\) to converged do: \(\alpha_{i}^{t}\gets\) update(\(\alpha_{i}^{t-1}\)) (for \(i\in\{1,\cdots,n\}\)), \(\beta^{t}\gets\) update(\(\beta^{t-1}\)), determine \(\kappa^{t}\), \(\psi_{j}^{t}\) and \(p\) using (4), \((\widehat{\beta}^{0},\widehat{\alpha}^{0})\gets (\beta^{t},\alpha^{t})\), after the inner loop do \((\beta^{t},\alpha^{t})\gets(\widehat{\beta}^{u},\widehat{\alpha}^{u})\) if \(P_{\lambda}(\widehat{\beta}^{u})-D_{\lambda}(\widehat{\alpha}^{u})< P_{\lambda}(\beta^{t})-D_{\lambda}(\alpha^{t})\); for inner loop \(u=1,\cdots,\lceil\frac{n}{a}\rceil\), randomly pick up a subset indices \(K\), \(\widehat{\alpha}_{i}^{u}\gets\) update(\(\widehat{\alpha}_{i}^{u-1}\)) if \(i\in K\), and \(\widehat{\beta}_{j}^{u}\gets\) update(\(\widehat{\beta}_{j}^{u-1}\)) for \(j\in\{m\in\{1,\cdots,p\},\psi_{m}^{t}\ne0\}\).

At last, one remark is that if we randomly pick up a subset \(M_{h}\) follows the probability vector \(q\), then the iterative process presented in last section can be re-written as follows. First, input dual mini-batch size \(a\), primal mini-batch size \(b\), \(\beta^{0}\), \(\alpha^{0}\) as initial solutions, \(\overline{\beta}^{0}\gets\beta^{0}\), \(\overline{\alpha}^{0}\gets\alpha^{0}\). Partition the indices of primal variables into \(p\) mod b mini-batches whose the number of elements are \(b+1\) and (\(\lfloor\frac{p}{b}\rfloor-p\) mod \(b\)) mini-batches whose the number of elements are \(b\). For \(t=1,2,\cdots\) to converged do: Generate random index from sampling probability \(p\) \(a\) times with replacement (denote \(K\) as the set of random indices); randomly pick up a subset \(M_{h}\) follows the probability vector \(q\); update the dual and the primal coordinates \(\alpha_{i}^{t+1}={\rm argmax}_{{\bf w}\in\Bbb R}\{-{\bf w}-l_{i}^{*}(-{\bf w})-\frac{np_{i}}{2\sigma_{i}}({\bf w}-\alpha_{i}^{t})^{2}\}\) if \(i\in K\) (otherwise, \(\alpha_{i}^{t+1}=\alpha_{i}^{t}\)), \(\overline{\alpha}_{i}^{t+1}=\alpha_{i}^{t}+\frac{(\alpha_{i}^{t+1}-\alpha_{i}^{t})}{ap_{i}n}\) for \(i\in\{1,\cdots,n\}\), \(\beta_{j}^{t+1}={\rm argmin}_{x\in\Bbb R}\{\lambda g(x)-\frac{x}{n}+\frac{q M_{h} p}{2\tau|M_{h}|}(x-\beta_{j}^{t})^{2}\}\) if \(j\in M_{h}\) (otherwise, \(\beta_{j}^{t+1}=\beta_{j}^{t}\)), and \(\overline{\beta}^{t+1}=\beta^{t+1}+\theta(\beta^{t+1}-\beta^{t})\).

| \(P\)@3 average | \(P\)@5 average | \(P\)@10 average | |

|---|---|---|---|

| precision ratio | precision ratio | precision ratio | |

| Algorithm in our paper | 0.4892 | 0.5739 | 0.7103 |

| Algorithm in [12] | 0.4549 | 0.5117 | 0.5859 |

| Algorithm in [13] | 0.4282 | 0.4849 | 0.5632 |

| Algorithm in [14] | 0.4831 | 0.5635 | 0.6871 |

When \(N\)= 3, 5, or 10, the precision ratio obtained by the proposed algorithm is higher than those determined by the algorithms in [12], [13] and [14]. In particular, the precision ratios of those algorithms increase significantly as \(N\) increases. It can be concluded that the algorithm described in our paper is superior to those proposed in [12], [13] and [14].

| \(P\)@1 average | \(P\)@3 average | \(P\)@5 average | |

|---|---|---|---|

| precision ratio | precision ratio | precision ratio | |

| Algorithm in our paper | 0.2778 | 0.5000 | 0.5667 |

| Algorithm in [15] | 0.2778 | 0.4815 | 0.5444 |

| Algorithm in [13] | 0.2222 | 0.4074 | 0.4889 |

| Algorithm in [14] | 0.2778 | 0.4630 | 0.5333 |